Simplifying Similarity Problem: Introduction to Siamese Neural Networks

Learn how a machine learning model can be built using only a few images per class.

Apart from classification and regression problems, there is a third type of problem, called a similarity problem, in which we have to determine whether two objects are similar or not. Training models for such problems typically requires much less data than the other two problem types.

Similarity learning is an area of supervised machine learning in which the goal is to learn a similarity function that measures how similar or related two objects are and returns a similarity value. A higher similarity score is returned when the objects are similar, and a lower similarity score is returned when the objects are different.

Before going further, let’s review classification and regression problems with examples.

Suppose you want to train a model that can recognize images of dogs, cats and rats. For this, you need a labelled dataset containing images of dogs, cats and rats. After training, for any input image the network can only output one of the labels dog, cat or rat. This is a standard computer vision problem known as image classification.

Now suppose you want to predict property prices. You would collect data on all property sales in the particular area you are interested in. After training, the model outputs a continuous value, since price is a continuous quantity. This is regression.

Siamese Neural Networks

In the modern deep learning era, neural networks perform well at almost every task, but they rely on large amounts of data to do so. For certain problems, such as face recognition and signature verification, we can’t always rely on getting more data. To solve these kinds of tasks, we can use a type of neural network architecture called Siamese Neural Networks.

A Siamese neural network (sometimes called a twin neural network) is an artificial neural network that contains two or more identical subnetworks, meaning they have the same configuration with the same parameters and weights. In practice the weights are shared, so only one set of parameters is trained and every subnetwork uses it. These networks measure the similarity of their inputs by comparing the inputs' feature vectors.
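To make the weight-sharing idea concrete, here is a minimal sketch in plain Python (no deep learning framework assumed). The class `SiameseNet` and the helpers `make_encoder`, `encode` and `euclidean` are hypothetical names, and the encoder is only an untrained linear layer followed by tanh with random weights; a real SNN would use a trained CNN or similar network. The point is that both inputs pass through the same `weights`, so the two "twins" cannot drift apart.

```python
import math
import random


def make_encoder(in_dim, out_dim, seed=0):
    """Create ONE weight matrix (out_dim x in_dim) with random, untrained values."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def encode(weights, x):
    """Map an input vector x to a feature vector F(x) = tanh(W x)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


def euclidean(u, v):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


class SiameseNet:
    """Two twin branches that share a single set of weights."""

    def __init__(self, in_dim, out_dim, seed=0):
        self.weights = make_encoder(in_dim, out_dim, seed)  # the only parameters

    def forward(self, a, b):
        # Both inputs go through the same weights, so the branches are
        # identical by construction.
        fa = encode(self.weights, a)
        fb = encode(self.weights, b)
        return euclidean(fa, fb)


net = SiameseNet(in_dim=4, out_dim=3)
x1 = [0.2, 0.1, 0.9, 0.4]
x2 = [0.2, 0.1, 0.8, 0.4]
x3 = [0.9, 0.7, 0.0, 0.1]
print(net.forward(x1, x1))  # 0.0: identical inputs give identical embeddings
print(net.forward(x1, x2), net.forward(x1, x3))
```

Because there is only one parameter set, training "one subnetwork" and training "both" are the same thing: any weight update is automatically shared by every branch.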

Conventional deep neural networks for classification not only need a large volume of data to train on, but also have to be updated and retrained whenever classes are added to or removed from the dataset. Siamese networks avoid this.

The biggest advantage of SNNs (Siamese Neural Networks) is that they learn a similarity function. We can therefore train one to check whether two images show the same thing. This lets us classify new classes of data without training the network again.
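A trained SNN is typically used for verification by comparing the distance between two embeddings against a threshold. The sketch below assumes the embeddings were already produced by a trained encoder; the vectors, the `is_same` helper and the threshold of 0.5 are hypothetical values chosen for illustration, and in practice the threshold would be tuned on validation pairs.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def is_same(emb_a, emb_b, threshold):
    """Declare a match when the embedding distance falls below the threshold."""
    return euclidean(emb_a, emb_b) < threshold


# Hypothetical embeddings, as if produced by an already-trained Siamese encoder.
reference = [0.10, 0.80, 0.30]  # e.g. an enrolled face or signature
candidate = [0.12, 0.78, 0.33]  # new input, close to the reference
impostor = [0.90, 0.10, 0.70]   # new input, far from the reference

THRESHOLD = 0.5  # assumed value; tune on held-out pairs in practice
print(is_same(reference, candidate, THRESHOLD))  # True
print(is_same(reference, impostor, THRESHOLD))   # False
```

Supporting a new person or signature only means storing one more reference embedding; the encoder itself is not retrained.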

Since training SNNs involves pairwise learning, the usual cross-entropy loss over a fixed set of class labels cannot be applied directly. Two loss functions are mainly used to train Siamese Neural Networks:

1. Contrastive Loss

Contrastive loss is a popular and widely used loss function. It is a distance-based loss, as opposed to more conventional error-prediction losses. It is used to learn embeddings in which two similar points have a small Euclidean distance and two dissimilar points have a large Euclidean distance. However, this loss cannot learn a ranking, which means it cannot tell us how similar two pairs are relative to each other. It can be given as:

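$$L(A,B) = y\,\lVert F(A) - F(B) \rVert_2^2 + (1 - y)\,\max\left(0,\; m^2 - \lVert F(A) - F(B) \rVert_2^2\right)$$

where F is the shared embedding network, y = 1 for a similar pair and y = 0 for a dissimilar pair, and m is a margin.

2. Triplet loss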

Using contrastive loss, we could only differentiate between similar and dissimilar images. With triplet loss, we can also find out which image is more similar to a given image than the others. In other words, the network learns a ranking when trained using triplet loss.

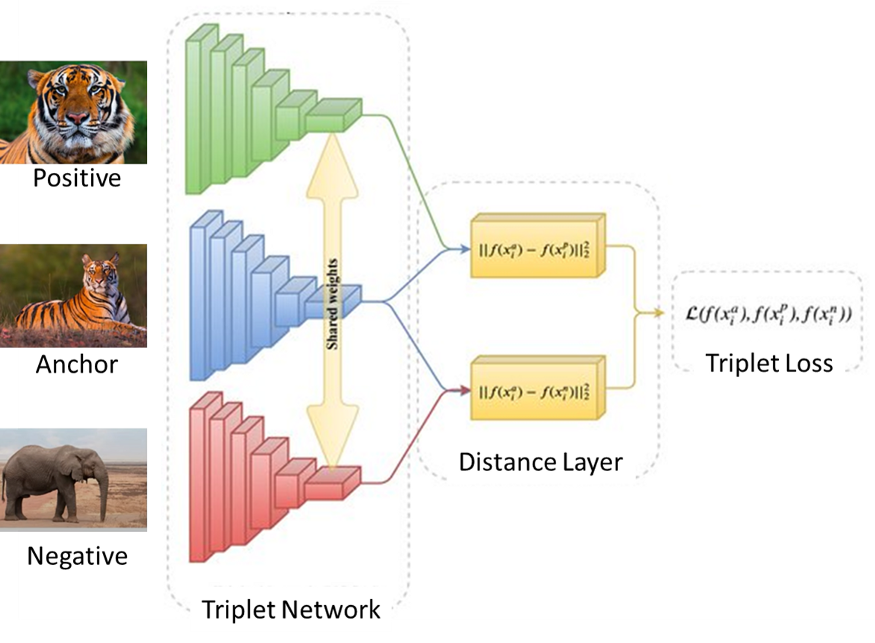

When using triplet loss, we no longer need positive and negative pairs of images. Instead, we need triplets of images: an anchor image, a positive image that is similar to the anchor image, and a negative image that is very different from the anchor image, as illustrated in image 1.

It is a loss function where an anchor input is compared to a positive input and a negative input. The distance from the anchor input to the positive input is minimized, and the distance from the anchor input to the negative input is maximized. It can be given as:

$$L(A,P,N) = \max\left(0,\; \lVert F(A) - F(P) \rVert_2^2 - \lVert F(A) - F(N) \rVert_2^2 + m\right)$$

where m is a margin. When the positive image is closer to the anchor than the negative image by at least m, the function returns zero and there is no loss. Otherwise the loss is positive, and minimizing it pulls the positive image closer to the anchor and pushes the negative image farther away.

In other words, the distance between the anchor and positive images should be smaller than the distance between the anchor and negative images by at least the margin m.
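Both losses can be written directly from the formulas in this section. This is a minimal plain-Python sketch assuming embeddings are given as lists of floats and distances are squared Euclidean, as in those formulas; in a real training loop the same expressions would be computed on framework tensors so that they can be backpropagated. Note that some implementations use max(0, m - d)² for dissimilar pairs, with d the unsquared distance, instead of max(0, m² - d²).

```python
def squared_distance(u, v):
    """Squared Euclidean distance ||u - v||_2^2."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def contrastive_loss(fa, fb, y, m):
    """y = 1 for a similar pair, y = 0 for a dissimilar pair; m is the margin."""
    d2 = squared_distance(fa, fb)
    return y * d2 + (1 - y) * max(0.0, m ** 2 - d2)


def triplet_loss(fa, fp, fn, m):
    """Loss for anchor, positive and negative embeddings with margin m."""
    return max(0.0, squared_distance(fa, fp) - squared_distance(fa, fn) + m)


# Hypothetical 2-D embeddings: positive 0.5 away, negative 2.0 away from the anchor.
anchor = [0.0, 0.0]
positive = [0.5, 0.0]
negative = [2.0, 0.0]

print(contrastive_loss(anchor, positive, 1, 1.0))  # 0.25: similar pair, small distance
print(contrastive_loss(anchor, negative, 0, 1.0))  # 0.0: dissimilar pair beyond the margin
print(contrastive_loss(anchor, positive, 0, 1.0))  # 0.75: "dissimilar" pair that is too close
print(triplet_loss(anchor, positive, negative, 1.0))  # 0.0: ordering already satisfied
print(triplet_loss(anchor, negative, positive, 1.0))  # 4.75: wrong ordering is penalized
```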

Real-world Applications of SNNs

Examples include, but are not limited to:

Facial Recognition

Signature Verification

Banknote Fraud Detection

Audio Classification

Advantages

Because SNNs learn a similarity function, they can be used to determine whether two images belong to the same class.

Traditional classification networks learn to predict a fixed set of classes, which is a problem when a class must be added or removed. Once an SNN is trained, it can be used to categorize data into classes that the model has never “seen” during training.

SNNs can be used for k-shot classification. Generally speaking, k-shot image classification aims to build classifiers from only k images per class.

SNNs are generally more robust to class imbalance than traditional deep learning techniques.

An SNN can be used as a feature extractor, with other ML components (for example, a classifier) built on top of its embeddings.
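The k-shot and "unseen class" advantages can be sketched as a nearest-class lookup in embedding space. This plain-Python example assumes the embeddings come from an already-trained SNN encoder; the support vectors, the class names and the `k_shot_classify` helper are hypothetical, and scoring each class by its mean distance to the query is one assumed choice among several (the nearest single example, or the distance to the class's mean embedding, are common alternatives).

```python
import math


def euclidean(u, v):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def k_shot_classify(query, support):
    """Return the label whose support embeddings are, on average, closest to the query.

    support maps each label to a list of its k embeddings.
    """
    best_label, best_score = None, float("inf")
    for label, embeddings in support.items():
        score = sum(euclidean(query, e) for e in embeddings) / len(embeddings)
        if score < best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical 2-shot support set for classes the encoder was never trained on.
support = {
    "class_a": [[0.0, 1.0], [0.1, 0.9]],
    "class_b": [[1.0, 0.0], [0.9, 0.1]],
}
print(k_shot_classify([0.2, 0.8], support))  # class_a
```

Adding a class means adding its k embeddings to `support`; no network weights change.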

Disadvantages

The final output from an SNN is a similarity value, not a probability.

The neural network’s architecture is more complicated. Depending on the loss function used, a custom training loop may have to be written (assuming no existing code repository can be reused) so that the loss can be computed and backpropagated. If the SNN is used as a feature extractor, additional ML components will need to be added, e.g., fully/densely connected layer(s), hard/soft-margin support vector machine(s), etc.

SNNs require more training time than traditional neural network architectures, because the SNN’s learning mechanism needs a large number of training sample combinations (pairs or triplets) to produce an accurate model.
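To give a feel for how quickly the number of training combinations grows, this sketch counts the positive pairs, negative pairs and (anchor, positive, negative) triplets available in a small labelled dataset. The three-class dataset and the `count_pairs_and_triplets` helper are hypothetical, and triplets are counted with the anchor and positive as an ordered pair.

```python
from itertools import combinations


def count_pairs_and_triplets(labels):
    """Count positive pairs, negative pairs and valid (anchor, positive, negative) triplets."""
    idx = range(len(labels))
    pairs = list(combinations(idx, 2))  # unordered pairs of distinct samples
    positive = sum(1 for i, j in pairs if labels[i] == labels[j])
    negative = len(pairs) - positive
    triplets = 0
    for a in idx:
        for p in idx:
            # The positive must be a different sample with the anchor's label.
            if p == a or labels[p] != labels[a]:
                continue
            # Any sample with a different label can serve as the negative.
            triplets += sum(1 for n in idx if labels[n] != labels[a])
    return positive, negative, triplets


for per_class in (5, 10):
    labels = ["dog"] * per_class + ["cat"] * per_class + ["rat"] * per_class
    print(per_class, count_pairs_and_triplets(labels))
# 5 (30, 75, 600)
# 10 (135, 300, 5400)
```

Doubling the number of samples per class here roughly quadruples the number of pairs and multiplies the number of triplets by nine, which is where much of the extra training time comes from.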

This article was originally published at https://www.vevesta.com/blog/35-Siamese-Neural-Networks