Here is what you need to know about Sparse Categorical Cross Entropy in a nutshell

Insights into frequently encountered errors when using sparse categorical cross-entropy

Working with machine learning and deep learning models involves cost functions, which are used to optimize the model during training. The better the model, the lower the loss. One of the most widely used cost functions for classification problems is cross-entropy. Let's dig deeper into it.

Cross-Entropy Loss Function

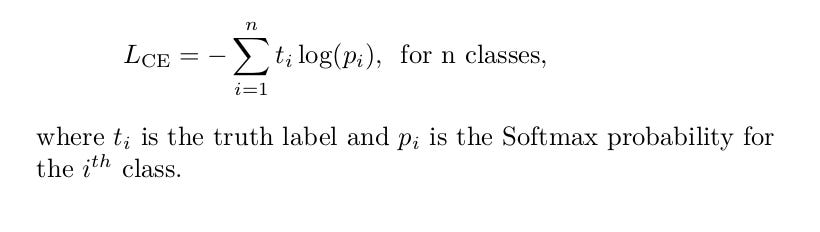

Cross-entropy loss is also known as logarithmic loss, log loss or logistic loss. The predicted probability for each class is compared with the actual class, and the loss penalizes that probability based on how far it is from the expected value. The penalty is logarithmic: it yields a large score when the difference is large (close to 1) and a small score when the difference is small (tending to 0). A perfect model has a cross-entropy loss of 0.
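As a rough numeric illustration of the logarithmic penalty, the sketch below prints the loss contribution of a single sample for a few probabilities assigned to the true class. The helper name `log_penalty` and the sample probabilities are made up for this example.

```python
import math


def log_penalty(p_true_class: float) -> float:
    """Loss contribution of one sample: -log of the probability given to the true class."""
    return -math.log(p_true_class)


# The closer the probability of the true class is to 1, the smaller the penalty.
for p in (0.99, 0.9, 0.5, 0.1, 0.01):
    print(f"p(true class) = {p:<4} -> loss = {log_penalty(p):.4f}")
```

The penalty grows slowly while the prediction is close to correct and very quickly as the probability of the true class approaches 0.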

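Cross-entropy is defined as

$$L_{CE} = -\sum_{i=1}^{n} t_i \log(p_i)$$

where $n$ is the number of classes, $t_i$ is the true label for class $i$ and $p_i$ is the predicted probability for class $i$.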

Categorical Cross-Entropy and Sparse Categorical Cross-Entropy

Both categorical cross-entropy and sparse categorical cross-entropy use the same loss function as defined above. The only difference between the two is in how the labels are defined.

Categorical cross-entropy is used when the labels are one-hot encoded. For example, a 3-class classification problem has the following label values:

[1,0,0], [0,1,0] and [0,0,1].

In sparse categorical cross-entropy, labels are integer encoded, for example,

[0], [1] and [2] for a 3-class problem. TensorFlow expects these integers to be class indices starting at 0, so the labels for n classes must lie in the range 0 to n-1.
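To check that the two label encodings lead to the same loss, here is a minimal NumPy sketch. It is not TensorFlow's implementation; the function names, the zero-based integer labels and the probability values are assumptions chosen for illustration.

```python
import numpy as np


def categorical_ce(one_hot_labels, probs):
    """Mean cross-entropy for one-hot labels of shape (batch, classes)."""
    return float(np.mean(-np.sum(one_hot_labels * np.log(probs), axis=1)))


def sparse_categorical_ce(int_labels, probs):
    """Mean cross-entropy for integer labels of shape (batch,)."""
    # Pick, for each row, the probability predicted for the true class.
    picked = probs[np.arange(len(int_labels)), int_labels]
    return float(np.mean(-np.log(picked)))


# Hypothetical predicted probabilities for 3 samples and 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.2, 0.3, 0.5]])
int_labels = np.array([0, 1, 2])           # integer (sparse) encoding
one_hot_labels = np.eye(3)[int_labels]     # the same labels, one-hot encoded

loss_dense = categorical_ce(one_hot_labels, probs)
loss_sparse = sparse_categorical_ce(int_labels, probs)
print(loss_dense, loss_sparse)
```

Both calls return the same value, because multiplying by a one-hot vector simply selects the log-probability of the true class, which is what the integer index does directly.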

Implementation Nuggets when using TensorFlow

Some of the following nuggets have been collected from the experience of data scientists [3,4,5] who ran into errors while using sparse categorical cross-entropy:

Many people wonder why `tf.keras.losses.categorical_crossentropy()` does not yield the expected output in some cases. The reason is that `tf.keras.losses.categorical_crossentropy()` applies a small offset (1e-7) to `y_pred` when it is equal to one or zero. Similarly, the `SparseCategoricalCrossentropy()` loss function applies a small offset (1e-7) to the predicted probabilities to make sure that the loss values are always finite.

Sparse categorical cross-entropy is more efficient when you have a lot of categories or labels, which would consume a huge amount of RAM if one-hot encoded.
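The effect of the 1e-7 offset on the loss can be sketched in plain Python. This assumes the offset is applied by clipping the probability into the range [eps, 1 - eps]; the helper `clipped_log_loss` is hypothetical, and TensorFlow's internals may differ between versions.

```python
import math

EPSILON = 1e-7  # the offset quoted in the text


def clipped_log_loss(p_true_class: float, eps: float = EPSILON) -> float:
    """-log(p) after clipping p into [eps, 1 - eps], so the result stays finite."""
    p = min(max(p_true_class, eps), 1.0 - eps)
    return -math.log(p)


print(clipped_log_loss(0.0))  # large but finite instead of infinity
print(clipped_log_loss(1.0))  # very small, but not exactly 0
```

This is why a hand-computed loss for a prediction of exactly 0 or 1 can differ slightly from what the loss function reports.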

Sparse categorical cross-entropy has two key arguments, `from_logits` and `reduction`. Two commonly used settings for `reduction` are 'auto' and 'none'. It is normally set to 'auto', which returns a single value: the categorical cross-entropy averaged over the batch, i.e. the mean of -label*log(pred). Setting it to 'none' instead returns each element of the categorical cross-entropy, -label*log(pred), as a tensor of shape (batch_size). Computing a reduce_mean on this tensor gives the same result as reduction='auto' [3].
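To make the relationship between the per-sample losses and their mean concrete, here is a minimal NumPy sketch of the computation. It is not TensorFlow's actual code; the function name and the logits and labels are hypothetical values chosen for illustration.

```python
import numpy as np


def per_sample_sparse_ce(int_labels, logits):
    """Per-sample loss from raw logits, analogous to reduction='none'."""
    # Log-softmax, shifted by the row maximum for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Negative log-probability of the true class for each sample.
    return -log_probs[np.arange(len(int_labels)), int_labels]


# Hypothetical inputs: 4 samples, 2 classes
logits = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
int_labels = np.array([0, 1, 0, 1])

losses = per_sample_sparse_ce(int_labels, logits)  # shape (4,), one value per sample
mean_loss = losses.mean()                          # the single averaged value
print(losses, mean_loss)
```

Keeping the per-sample values is useful when you want to inspect or weight individual examples before averaging.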

```python
import tensorflow as tf

# With `reduction='none'`
# labels_sparse (integer labels) and model_predictions (logits) are assumed to be defined earlier
loss_obj_scc_red = tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=True, reduction='none')
loss_from_scc_red = loss_obj_scc_red(
    labels_sparse,
    model_predictions,
)
print(loss_from_scc_red, tf.math.reduce_mean(loss_from_scc_red))
# >> (<tf.Tensor: shape=(4,), dtype=float32, numpy=array([0.31326166, 1.3132616 , 1.3132616 , 0.31326166], dtype=float32)>,
#     <tf.Tensor: shape=(), dtype=float32, numpy=0.8132617>)
```

When you explicitly apply a softmax (or sigmoid) function in the model for a classification task, use the default option of the TensorFlow loss function, `from_logits=False`. TensorFlow then assumes that the inputs you feed to the loss function are already probabilities, so it does not apply the softmax function again [4].

```python
# By default from_logits=False
# y_true and nn_output_after_softmax are assumed to be defined earlier
loss_taking_prob = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False)
loss_1 = loss_taking_prob(y_true, nn_output_after_softmax)
print(loss_1)
# tf.Tensor(0.25469932, shape=(), dtype=float32)
```

When you do not intend to apply the softmax function separately and would rather include it in the calculation of the loss, set `from_logits=True`. Here you are letting TensorFlow perform the softmax operation for you. This means that the inputs provided to the loss function are not scaled, i.e. they can range from -inf to +inf and are not probabilities [4].

```python
# With from_logits=True the loss applies softmax internally
# y_true and nn_output_before_softmax are assumed to be defined earlier
loss_taking_logits = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
loss_2 = loss_taking_logits(y_true, nn_output_before_softmax)
print(loss_2)
# tf.Tensor(0.2546992, shape=(), dtype=float32)
```

Sparse categorical cross-entropy is suited to problems where each sample belongs to exactly one class, i.e. exactly one entry of the y label is set to 1. It does not work for multi-label problems. Categorical cross-entropy, on the other hand, can handle targets spread over several classes, or any targets that are not one-hot, as long as the values are between 0 and 1 and sum to 1, i.e. any probability distribution. This is a use case that often tends to get forgotten [5].
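The point about targets that are not one-hot can be illustrated with a small NumPy sketch. The function name, the predicted probabilities and the soft target below are hypothetical; the sketch only shows that the cross-entropy formula accepts any target distribution, while an integer label can only stand for a one-hot target.

```python
import numpy as np


def categorical_ce(target_dist, probs):
    """Cross-entropy between a target distribution and predicted probabilities (one sample)."""
    return float(-np.sum(target_dist * np.log(probs)))


probs = np.array([0.6, 0.3, 0.1])        # hypothetical predicted probabilities

hard_target = np.array([1.0, 0.0, 0.0])  # equivalent to the integer label 0
soft_target = np.array([0.8, 0.1, 0.1])  # sums to 1; no single integer label encodes it

loss_hard = categorical_ce(hard_target, probs)
loss_soft = categorical_ce(soft_target, probs)
print(loss_hard, loss_soft)
```

With the hard target only the true class contributes to the loss, whereas with the soft target every class with non-zero target mass contributes.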

References

This blog was originally published at https://www.vevesta.com/blog/27-Sparse-Categorical-Cross-Entropy