Deep Dive into Reasons to Choose Focal Loss over Cross-Entropy

Learn how to address the class imbalance problem by using Focal Loss instead of Cross-Entropy

Focal loss, as described in the paper Focal Loss for Dense Object Detection, is a dynamically scaled cross-entropy loss in which the scaling factor decays to zero as confidence in the correct class increases.

Cross-entropy penalizes the model according to how far its prediction is from the true label. The cross-entropy loss for a sample is class-agnostic: it does not change with the class the sample belongs to and depends only on the predicted probability p. Put simply, cross-entropy loss gives equal importance (or weight) to all classes and is a function of the predicted probability p alone.
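As a minimal sketch of this behaviour, the snippet below computes binary cross-entropy for a single sample. The function name `cross_entropy` and the +1/−1 label convention are illustrative choices, not something the article prescribes.

```python
import math

def cross_entropy(p: float, y: int) -> float:
    """Binary cross-entropy for one sample.

    p: predicted probability of the positive class, assumed to be in (0, 1).
    y: true label, +1 for positive and -1 for negative (illustrative convention).
    """
    return -math.log(p) if y == 1 else -math.log(1.0 - p)

# The loss grows as the prediction moves away from the true label.
for p in (0.9, 0.6, 0.1):
    print(f"positive sample, p={p:.1f}: loss={cross_entropy(p, 1):.3f}")

# Class-agnostic: both samples below are predicted with 90% confidence in
# their true class, so they receive the same loss.
loss_pos = cross_entropy(0.9, 1)    # positive sample, P(positive) = 0.9
loss_neg = cross_entropy(0.1, -1)   # negative sample, P(positive) = 0.1
print(f"positive: {loss_pos:.3f}, negative: {loss_neg:.3f}")
```

The last two values are equal: a positive and a negative sample that are predicted equally well are penalized identically.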

Object detectors can be broadly classified into two categories: two-stage and one-stage.

Two-Stage Approach:

The dominant paradigm in modern object detection is based on a two-stage approach, such as Region-based CNN (R-CNN) and its successors. The first stage generates a sparse set of candidate proposals that should contain all objects while filtering out the majority of negative locations, and the second stage classifies the proposals into foreground classes or background. R-CNN upgraded the second-stage classifier to a convolutional network, yielding large gains in accuracy and ushering in the modern era of object detection. Region Proposal Networks (RPN) integrated proposal generation with the second-stage classifier into a single convolutional network, forming the Faster R-CNN framework.

One-Stage Approach:

One-stage detectors, such as the YOLO family of detectors and SSD, have a single convolutional network that is responsible for detection. They usually divide the input image into grid cells, which are then used to generate bounding boxes. Both classic one-stage object detection methods, like boosted detectors and DPMs, and more recent methods, like SSD, face a large class imbalance during training.

Put simply, Focal Loss (FL) is an improved version of Cross-Entropy Loss (CE) that addresses class imbalance during training in tasks like object detection. It applies a modulating term to the cross-entropy loss in order to focus learning on hard, misclassified examples (for instance, background with noisy texture, a partial object, or the object of interest) and to down-weight easy examples (for instance, plain background). Focal Loss thus reduces the loss contribution from easy examples and increases the importance of correcting misclassified ones.

The Class-Imbalance Problem

The class imbalance problem affects an object detection model in the following two ways:

a) Inefficient training due to easy negatives

If you build a neural network and train it for a bit, it will quickly learn to classify the negatives at a basic level. From this point on, most training examples do little to improve the model’s performance because the model already handles them well. Training becomes inefficient because most locations in an image are easy negatives (i.e., the detector can easily classify them as background) and hence contribute no useful learning signal.

b) Degenerate models formed due to easy negatives

When the small losses from easy negatives are summed over many images, they overwhelm the total loss, giving rise to degenerate models. Easily classified negatives make up the majority of the loss and dominate the gradient. This shifts the model’s focus toward getting only the negative examples classified correctly.
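A quick back-of-the-envelope calculation makes this concrete. The numbers below are invented for illustration: 100,000 easy background locations to which the model already assigns 99% probability of the correct class, against 100 hard object locations where it assigns only 10%. Each loss is the cross-entropy value −log(probability of the correct class).

```python
import math

# Hypothetical batch (all numbers are illustrative assumptions).
n_easy, p_correct_easy = 100_000, 0.99  # easy background locations
n_hard, p_correct_hard = 100, 0.10      # hard object locations

# Cross-entropy per sample is -log(probability of the correct class).
easy_total = n_easy * -math.log(p_correct_easy)
hard_total = n_hard * -math.log(p_correct_hard)
easy_share = easy_total / (easy_total + hard_total)

print(f"total loss from easy negatives: {easy_total:.1f}")
print(f"total loss from hard examples:  {hard_total:.1f}")
print(f"share of loss from easy negatives: {easy_share:.1%}")
```

Although each easy negative contributes a loss of only about 0.01, there are so many of them that together they account for roughly four fifths of the total loss in this toy setup.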

Focal Loss

The Focal Loss is designed to address the one-stage object detection scenario in which there is an extreme imbalance between foreground and background classes during training (e.g., 1:1000). We introduce the focal loss starting from the cross-entropy (CE) loss for binary classification:

CE(p, y) = −log(p) if y = 1, and −log(1 − p) otherwise.

In the above, y ∈ {±1} specifies the ground-truth class and p ∈ [0, 1] is the model’s estimated probability for the class with label y = 1. For notational convenience, we define pt, which equals p when y = 1 and 1 − p otherwise:

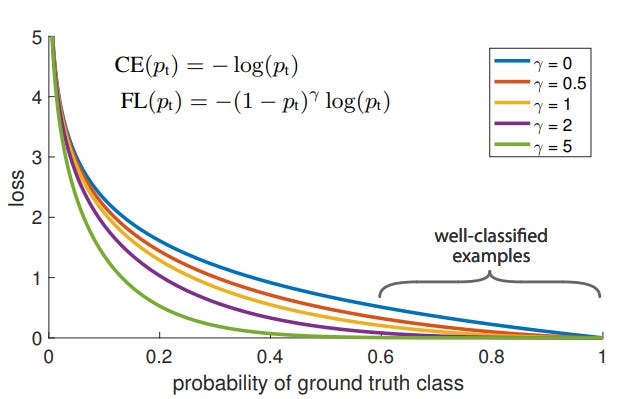

and rewrite CE(p, y) = CE(pt) = −log(pt). In the paper's loss plot, the CE loss is the blue (top) curve. Even examples that are easily classified (pt ≫ 0.5) incur a loss of non-trivial magnitude, and when summed over a large number of easy examples, these small loss values can overwhelm the rare class.

A common method for addressing class imbalance is to introduce a weighting factor α ∈ [0, 1] for class 1 and 1 − α for class −1. For notational convenience, we define αt analogously to how we defined pt. We write the α-balanced CE loss as:

CE(pt) = −αt log(pt)
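The following sketch evaluates the α-balanced CE loss, −αt log(pt), for three samples. The function name `alpha_balanced_ce` and the value α = 0.75 are assumptions chosen for illustration.

```python
import math

def alpha_balanced_ce(p: float, y: int, alpha: float) -> float:
    """alpha-balanced cross-entropy: -alpha_t * log(p_t).

    p: predicted probability of class y = 1, assumed to be in (0, 1).
    y: true label, +1 or -1.
    alpha: weight for class +1; class -1 receives 1 - alpha.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * math.log(p_t)

ALPHA = 0.75  # hypothetical value that up-weights the rare positive class

easy_neg = alpha_balanced_ce(0.01, -1, ALPHA)  # background, p_t = 0.99
hard_neg = alpha_balanced_ce(0.90, -1, ALPHA)  # background, p_t = 0.10
hard_pos = alpha_balanced_ce(0.10, 1, ALPHA)   # object,     p_t = 0.10

print(f"easy negative: {easy_neg:.4f}")
print(f"hard negative: {hard_neg:.4f}")
print(f"hard positive: {hard_pos:.4f}")
```

The hard positive is weighted three times as heavily as the equally hard negative (0.75 versus 0.25), while both negatives are multiplied by the same 1 − α, so their ratio to each other is unchanged.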

While α balances the importance of positive/negative examples, it does not differentiate between easy/hard examples. Instead, the focal loss reshapes the loss function to down-weight easy examples and thus focus training on hard negatives. More formally, it adds a modulating factor (1 − pt)^γ to the cross-entropy loss, with a tunable focusing parameter γ ≥ 0. We define the focal loss as:

FL(pt) = −(1 − pt)^γ log(pt)

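γ is called the focusing parameter. It is a tunable hyperparameter: its value is chosen by experimentation rather than learned by the model during training. Its purpose is to lessen the contribution of the easy examples. With γ = 0 the focal loss is equivalent to cross-entropy, and larger values of γ down-weight easy examples more strongly. The paper found γ = 2 to work best in its experiments.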
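To see the effect of γ, here is a small sketch (the function name `focal_loss` is illustrative) that evaluates −(1 − pt)^γ log(pt) for an easy example (pt = 0.9) and a hard one (pt = 0.1) at a few values of γ.

```python
import math

def focal_loss(p_t: float, gamma: float) -> float:
    """Focal loss for one sample, given the probability p_t of its true class.

    p_t is assumed to be in (0, 1); gamma >= 0 is the focusing parameter.
    """
    return -((1.0 - p_t) ** gamma) * math.log(p_t)

for gamma in (0.0, 0.5, 1.0, 2.0, 5.0):
    easy = focal_loss(0.9, gamma)  # well-classified example
    hard = focal_loss(0.1, gamma)  # badly misclassified example
    print(f"gamma={gamma}: easy={easy:.6f}  hard={hard:.6f}  hard/easy={hard / easy:.0f}")
```

With γ = 0 the modulating factor is 1, so the values are plain cross-entropy. With γ = 2 the easy example's loss is multiplied by (1 − 0.9)^2 = 0.01, while the hard example's loss is multiplied by (1 − 0.1)^2 = 0.81, so the hard example counts for far more relative to the easy one.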

In practice, an α-balanced variant of the focal loss is used:

FL(pt) = −αt (1 − pt)^γ log(pt)

The authors of the paper adopt this form in their experiments, as it yields slightly improved accuracy over the non-α-balanced form.
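As a rough illustration of the α-balanced form, −αt (1 − pt)^γ log(pt), the sketch below applies it to an invented batch of 100,000 easy background locations (pt = 0.99) and 100 hard object locations (pt = 0.1), and compares the share of the total loss that comes from the easy negatives under CE and under focal loss. The defaults α = 0.25 and γ = 2 are the values the paper reports as working best; the function names and the batch are assumptions made for this example.

```python
import math

def alpha_focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """alpha-balanced focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p: predicted probability of class y = 1, assumed to be in (0, 1).
    y: true label, +1 (object) or -1 (background).
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# Invented batch: (predicted p, label y, number of such locations).
easy_negatives = (0.01, -1, 100_000)  # background, p_t = 0.99
hard_positives = (0.10, +1, 100)      # objects,    p_t = 0.10

def easy_share(loss_fn) -> float:
    """Fraction of the summed loss that comes from the easy negatives."""
    easy = easy_negatives[2] * loss_fn(easy_negatives[0], easy_negatives[1])
    hard = hard_positives[2] * loss_fn(hard_positives[0], hard_positives[1])
    return easy / (easy + hard)

# gamma = 0 and alpha = 0.5 give 0.5 * CE for every sample, so the share
# is the same as under plain cross-entropy.
ce_share = easy_share(lambda p, y: alpha_focal_loss(p, y, alpha=0.5, gamma=0.0))
fl_share = easy_share(alpha_focal_loss)

print(f"easy-negative share under CE: {ce_share:.1%}")
print(f"easy-negative share under FL: {fl_share:.2%}")
```

In this toy batch the easy negatives supply most of the loss under cross-entropy but well under 1% of it under the α-balanced focal loss, even though they outnumber the hard examples 1000 to 1.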

The paper identifies class imbalance as the primary obstacle preventing one-stage object detectors from surpassing top-performing two-stage methods. To address it, the authors propose the focal loss, which applies a modulating term to the cross-entropy loss in order to focus learning on hard negative examples. The approach is simple and highly effective. The authors demonstrate its efficacy by designing a fully convolutional one-stage detector, RetinaNet, and report extensive experimental analysis showing that it achieved state-of-the-art accuracy and speed at the time.

Conclusion

The focal loss is designed to address class imbalance by down-weighting easy examples so that their contribution to the total loss is small even when their number is large.

A modulating term applied to the cross-entropy loss focuses training on the hard examples, which addresses the class imbalance that held back one-stage object detectors.
