Deep dive into the causes of overfitting and how best to prevent it

Quick overview of methods used to handle overfitting in shallow and deep neural networks

Overfitting occurs when a model is complex enough to memorize its training data instead of learning patterns that generalize. Such a model scores well on the samples it was trained on but fails to correctly recognize unseen data.
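To make the memorization point concrete, here is a minimal sketch using only the Python standard library. It is an illustration under assumptions, not something taken from the article: the noisy sine data, the nearest-neighbour model, and names such as `make_data` and `knn_predict` are all made up for the example. With `k=1` the model simply looks up the closest training point, so its training error is zero while its error on fresh samples is not.

```python
import math
import random

def make_data(n, rng, noise=0.3):
    """Sample n noisy points from y = sin(x) on [0, 2*pi] (assumed toy data)."""
    xs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def knn_predict(train_x, train_y, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k

def mse(train_x, train_y, xs, ys, k):
    """Mean squared error of the k-NN model on the points (xs, ys)."""
    errors = [(knn_predict(train_x, train_y, x, k) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)

rng = random.Random(0)
train_x, train_y = make_data(30, rng)
test_x, test_y = make_data(200, rng)

for k in (1, 7):
    train_err = mse(train_x, train_y, train_x, train_y, k)
    test_err = mse(train_x, train_y, test_x, test_y, k)
    print(f"k={k}: train MSE={train_err:.3f}, test MSE={test_err:.3f}")
```

The gap between the two errors for `k=1` is the signature of overfitting. A larger `k` gives up the perfect training score and will typically, though not always, do better on the unseen samples.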

According to the authors, the reasons for overfitting are as follows:

Noise of the training samples,

Lack of training samples (under-sampled training data),

Biased or disproportionate training samples,

Non-negligible variance of the estimation errors,

Multiple patterns with different non-linearity levels that need different learning models,

Biased predictions using different selections of variables,

Stopping the training procedure before convergence, or getting stuck in a local minimum,

Different distributions for training and testing samples.

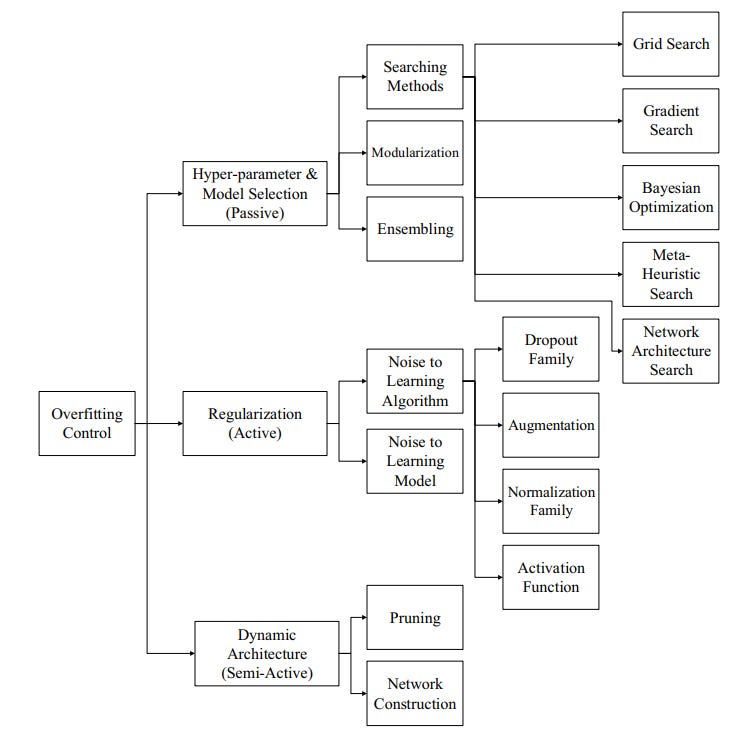

Methods to handle overfitting:

Passive schemes: These methods search for a suitable configuration of the model or network. They are sometimes called model selection techniques or hyper-parameter optimization techniques.

Active schemes: Also referred to as regularization techniques, these methods introduce dynamic noise while the model is being trained.

Semi-active schemes: Here the network itself is changed during training, either by pruning it or by adding hidden units as training proceeds.
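The three families can be sketched in a few lines each. The code below is a rough illustration under stated assumptions, not the authors' method. The article does not name specific techniques, so k-fold cross-validation stands in for a passive scheme, inverted dropout for an active one, and magnitude pruning for a semi-active one. All function names, the toy data, and the numbers are hypothetical.

```python
import math
import random

# --- Passive: choose a hyper-parameter by k-fold cross-validation ----------
def knn_predict(train, x, k):
    """Average the targets of the k points in `train` closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def cv_error(data, k, folds=5):
    """Mean squared validation error of k-NN, averaged over all folds."""
    total, count = 0.0, 0
    for f in range(folds):
        val = data[f::folds]  # every folds-th point, starting at index f
        train = [p for i, p in enumerate(data) if i % folds != f]
        for x, y in val:
            total += (knn_predict(train, x, k) - y) ** 2
            count += 1
    return total / count

# --- Active: inject noise during training (inverted dropout) ---------------
def dropout(activations, rate, rng, training=True):
    """Zero each unit with probability `rate`; rescale survivors by 1/(1-rate)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        return list(activations)  # no noise at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# --- Semi-active: change the network while training (magnitude pruning) ----
def prune_by_magnitude(weights, mask, fraction):
    """Disable `fraction` of the still-active weights with smallest magnitude."""
    active = [i for i, m in enumerate(mask) if m]
    n_prune = int(len(active) * fraction)
    new_mask = list(mask)
    for i in sorted(active, key=lambda i: abs(weights[i]))[:n_prune]:
        new_mask[i] = False
    return new_mask

rng = random.Random(0)

def noisy_sine(rng):
    """One assumed toy sample (x, sin(x) + Gaussian noise)."""
    x = rng.uniform(0.0, 2.0 * math.pi)
    return x, math.sin(x) + rng.gauss(0.0, 0.3)

data = [noisy_sine(rng) for _ in range(60)]
candidates = [1, 3, 5, 9, 15]
scores = {k: cv_error(data, k) for k in candidates}
best_k = min(scores, key=scores.get)
print("passive: selected k =", best_k)

print("active: ", dropout([1.0] * 8, 0.5, rng))

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
mask = [True] * len(weights)
for epoch in range(3):
    # A real loop would update `weights` with gradient steps here.
    if epoch > 0:
        mask = prune_by_magnitude(weights, mask, fraction=0.34)
    weights = [w if m else 0.0 for w, m in zip(weights, mask)]
print("semi-active:", weights)
```

Note the difference in when each scheme acts: the cross-validation search runs around training and leaves the model untouched, dropout perturbs activations at every training step but is switched off at inference, and pruning permanently alters the network's structure partway through training.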


The above article is sponsored by Vevesta.
